Autonomous optical velocimetry with a reinforcement learning agent

Reinforcement learning (RL) makes it possible to learn optimal actions to perform in real-world environments. The RL agent balances exploring new policies against exploiting known ones and receives a reward constructed specifically to promote certain outcomes in the environment. A powerful feature of RL is that optimal actions can be inferred even in very high-dimensional state spaces and/or action spaces. Modern RL algorithms use deep artificial neural networks that can learn complex decision boundaries and skillfully switch from one action to the next depending on the current state of the environment.
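The explore/exploit trade-off and the role of the reward can be illustrated with a deliberately small example. The sketch below is not the deep RL setup used in this project: it is a tabular epsilon-greedy agent on a toy three-action problem, and the function name `run_epsilon_greedy`, the reward means, and all parameter values are assumptions chosen for illustration only.

```python
import numpy as np

def run_epsilon_greedy(true_means, n_steps=5000, epsilon=0.1, seed=0):
    """Toy epsilon-greedy agent (illustrative, not the project's algorithm)."""
    rng = np.random.default_rng(seed)
    n_actions = len(true_means)
    q = np.zeros(n_actions)                  # running estimate of each action's value
    counts = np.zeros(n_actions, dtype=int)  # how often each action was taken
    for _ in range(n_steps):
        if rng.random() < epsilon:
            a = int(rng.integers(n_actions))  # explore: pick a random action
        else:
            a = int(np.argmax(q))             # exploit: pick the best-looking action
        # Noisy reward from the (hypothetical) environment.
        r = rng.normal(true_means[a], 1.0)
        counts[a] += 1
        q[a] += (r - q[a]) / counts[a]        # incremental mean update
    return q, counts

# Hypothetical mean rewards for three actions; the last one is the best.
q_est, counts = run_epsilon_greedy([0.2, 0.5, 1.0])
best_action = int(np.argmax(q_est))
```

With a small exploration rate the agent spends most of its steps on the action it currently believes is best, while the occasional random action keeps its estimates of the alternatives from going stale.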

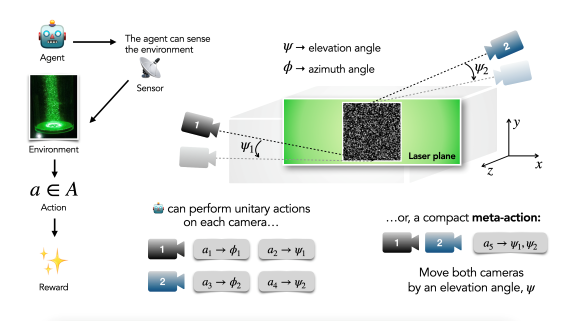

We use deep RL to build autonomous optical velocimetry experiments in which the RL agent receives data from wind/water tunnel experiments in real time. The RL agent learns to adjust the positions of cameras to collect appropriate data, e.g., to capture the flow features of most interest from the rich 3D flow domain. Training the RL agent is possible thanks to a novel CNN architecture developed in our group, the Lightweight Image Matching Architecture (LIMA), which computes flow targets in parallel with the running experiments and can therefore provide immediate sensory information to the RL agent. We also apply dimensionality reduction on the fly while training the RL agent so that it learns a more compact representation of the action space. This allows for both faster training and a faster response time, since the agent performs a collective meta-action executed on multiple experimental components simultaneously (e.g., multiple cameras) instead of performing unitary actions on each component separately.
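The idea of a collective meta-action can be sketched as follows. The text above does not say which dimensionality reduction technique is used, so this sketch substitutes plain PCA (computed with an SVD) as a stand-in. The number of cameras, the degrees of freedom per camera, the synthetic action log, and the helper names `fit_action_basis` and `decode_meta_action` are all hypothetical.

```python
import numpy as np

def fit_action_basis(actions, n_latent):
    """Fit a linear (PCA) basis to logged full-dimensional actions.

    PCA is an assumed stand-in for the unspecified reduction method.
    """
    mean = actions.mean(axis=0)
    # Rows of vt are orthonormal principal directions in action space.
    _, _, vt = np.linalg.svd(actions - mean, full_matrices=False)
    return mean, vt[:n_latent]

def decode_meta_action(z, mean, basis):
    """Expand a low-dimensional meta-action into one command per component."""
    return mean + z @ basis

rng = np.random.default_rng(0)
n_cameras, dof = 4, 3  # hypothetical: 4 cameras, 3 positional axes each
n_latent = 2           # hypothetical size of the compact action space

# Synthetic log of correlated camera moves (illustrative data only).
latent = rng.normal(size=(200, n_latent))
mixing = rng.normal(size=(n_latent, n_cameras * dof))
logged = latent @ mixing + 0.01 * rng.normal(size=(200, n_cameras * dof))

mean, basis = fit_action_basis(logged, n_latent)

# The agent outputs 2 numbers; decoding yields a move for every camera at once.
z = np.array([0.5, -1.0])
camera_moves = decode_meta_action(z, mean, basis).reshape(n_cameras, dof)
```

In this sketch the agent only has to choose two numbers per step instead of twelve, which is the kind of reduction that would shorten both training and response time. A linear basis is the simplest choice; a nonlinear encoder could take its place when camera motions are not well described by a linear subspace.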